Hiatus

I think I need to stop running my mouth so damn much about what I’m doing. I think most people working in experimental electronica love having these conversations, myself included, but what I intend as just sharing what I’ve been up to often comes off as seeking a solution for something, or maybe even whining.

I had this conversation the other night about issues with DPC latency I’ve been experiencing with my machine recently. For those who don’t know, DPC (Deferred Procedure Call) latency is the enemy of music production. In layman’s terms, it means processes on your machine can hold up the tasks that process real-time audio, leading to dropouts, crackling and all kinds of performance issues with your DAW. This is pretty much a PC-specific issue because of driver diversity; it usually surfaces as a result of poorly written or unoptimized drivers, and oftentimes it’s drivers that have nothing to do with audio (ahem, NVIDIA) causing the issue. The music production crowd for PC is a niche market, so DPC latency isn’t really prioritized in most off-the-shelf builds. Music production is really a Mac thing.

I was just talking about this in terms of what I’ve been working on and why I haven’t been playing for a few weeks, but in relatively short order the conversation turned to me dumping my codebase in favor of an entirely new solution. I actually find that this sort of response is common, which is unfortunate, because I’m never looking for another solution; I’m just running my mouth.

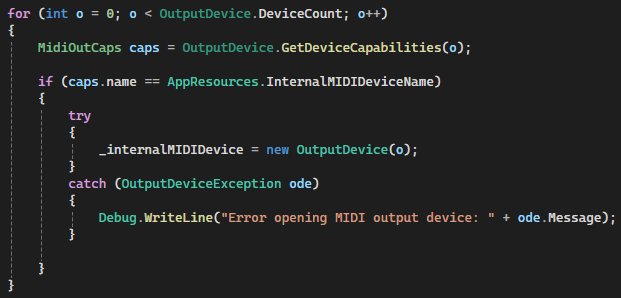

I like figuring things out myself; it’s just my personality. I have a codebase that does exactly what I want it to do and can be easily modified and extended. I can do anything in MIDI that I can think of from code. However, my solution is built on top of VST.NET, which makes it PC-specific. I would certainly consider a version of my plugin that would work on a Mac (e.g. a port to C++ or to another framework like NPlug or JUCE), but there is no scenario where I switch to a totally different solution that doesn’t involve the Aleator at all. It’s crazy how often people suggest that to me. Even a rewrite would mean a LOT of development time when getting better at making music is my immediate goal. A lot of people in the experimental space are hyper-focused on new tech or gear, which has never really been my thing. If you’re a really good guitarist, you’re not always chasing new guitars. I’m hyper-focused on generatively rendering music that sounds cool. All of the new gear, tech and processes in the world are worthless if they don’t make your music sound better or really expand your palette.

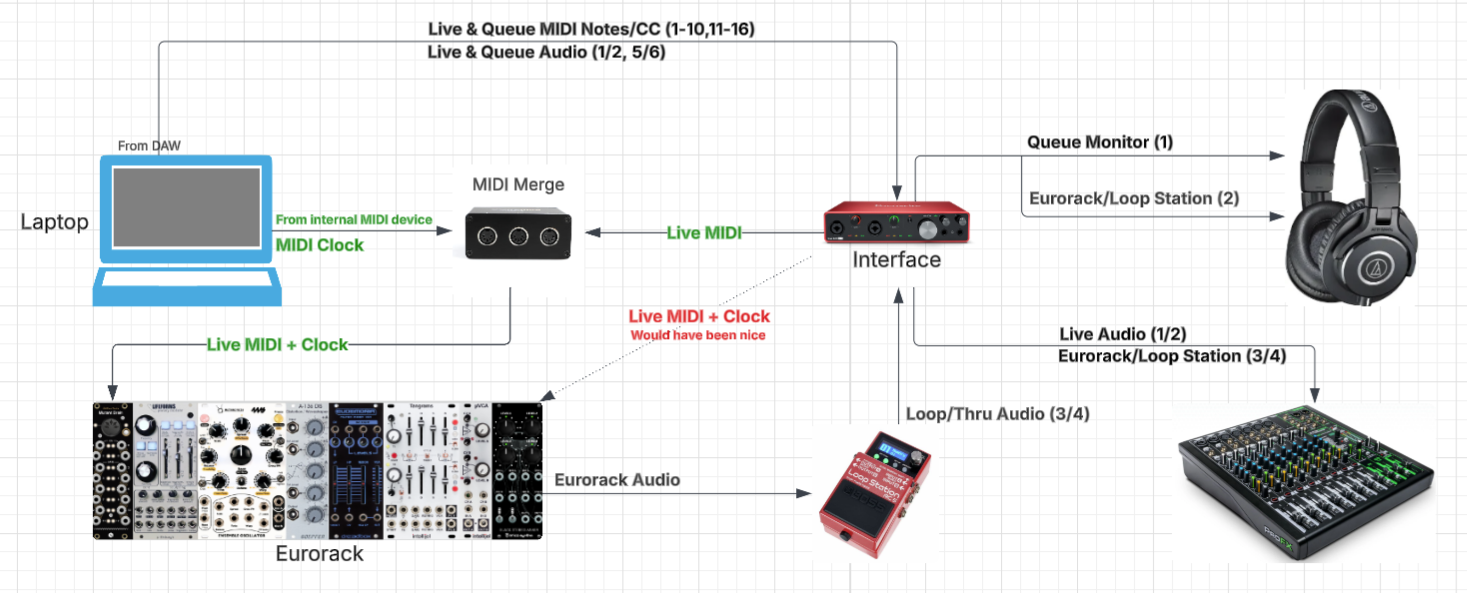

What I want to do is generatively flesh out loosely defined harmonic structures from code, with Eurorack as an extension of whatever I have loaded locally in the DAW. If I ever perfect that process and get too good or comfortable with it, maybe I’ll pivot at that point. It seems unlikely that will ever happen, though; there is always some nuance I’m looking to add via code. The next time I’m in a dev cycle, I’ll be looking to add some kind of snare scattering functionality. I could probably get a very similar effect by using a delay, but I want to do it programmatically and be able to trigger it in the Aleator UI. One thing is for sure - my code is the art, just as the music is. The idea of passing the generative component of what I do onto AI or some other application/component is antithetical to SERIES; I do the work and I do it manually. I would set all this shit down and go back to playing guitar on the couch before I farm the algorithmic/generative work out to a device.

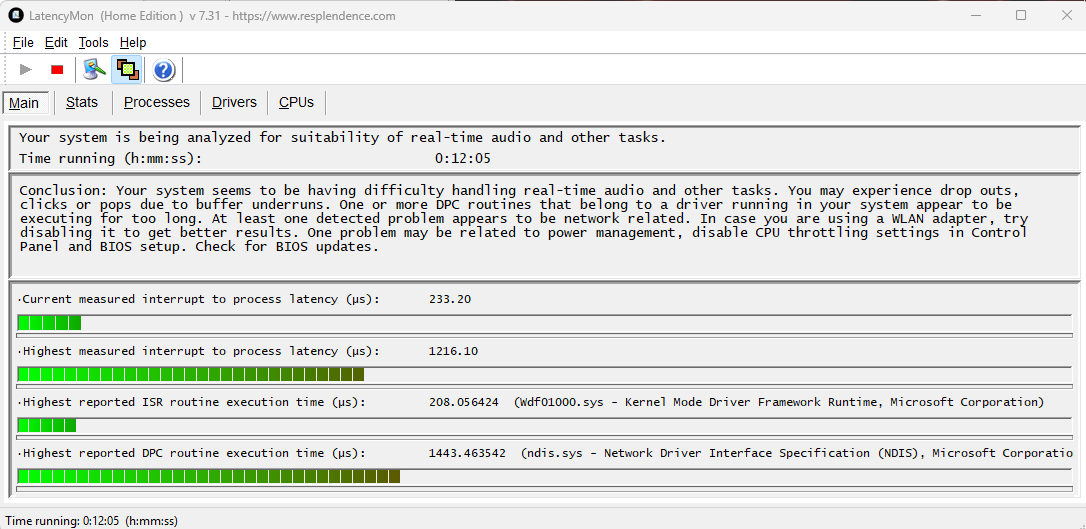

Back to the DPC latency. After a recent Windows update, I noticed DPC latency so bad that my DAW seemed useless for a few days. I was suddenly getting crackles and dropouts that made using Reaper unbearable. At some point the worst of it subsided a bit, but I can still hear intermittent crackling, and LatencyMon doesn’t look great:

Boooo.

This Alienware model is 6 years old and was never a fan favorite. I generally can’t run with a buffer smaller than 512 samples without risking buffer underruns. So I broke down and bought a Rok Box from PC Audio Labs. These are dedicated DAW machines, specced for audio and tested for DPC latency. I’m confident that my sets will be a lot better going forward and my environment will be much more stable.
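
For a rough sense of why buffer size matters here: each buffer gives the system about buffer_size / sample_rate seconds to deliver the next block of audio, and a driver stall longer than that window is what turns into a crackle. Below is a minimal sketch of that arithmetic, assuming a 44.1 kHz sample rate (the post doesn't state one). The helper names are hypothetical, and the "survives a stall" check is a deliberately crude model that ignores driver and converter overhead.

```python
# Hypothetical helpers illustrating buffer-period arithmetic.
# Assumes a 44.1 kHz sample rate by default; real round-trip latency
# also includes driver and converter overhead, which is ignored here.

def buffer_ms(buffer_size: int, sample_rate: int = 44_100) -> float:
    """Time available to fill one audio buffer, in milliseconds."""
    return buffer_size / sample_rate * 1000.0


def survives_stall(stall_ms: float, buffer_size: int, sample_rate: int = 44_100) -> bool:
    """Crude check: True if a stall (e.g. a long DPC) fits inside one buffer period."""
    return stall_ms < buffer_ms(buffer_size, sample_rate)


if __name__ == "__main__":
    for size in (128, 256, 512):
        print(f"{size:>4} samples -> {buffer_ms(size):.1f} ms")
```

Under that assumption, 512 samples works out to roughly 11.6 ms per buffer versus about 2.9 ms at 128, which is why a machine with long latency spikes gets pushed toward bigger buffers.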

The machine gets here tomorrow, and it will take a bit of time to set it up. Hopefully I will be in shape to test things out at ElectroSonicWorkshop on August 27th.